Three Threats to the Survival of New Media

As this talk is given on the occasion of REFRESH!, the first international conference on the histories of art, science, and technology, let's start with a word about historians.

To be a historian of new media art is a precarious career choice. It's a lot easier to be a historian of old media, if only because the artists you study are no longer likely to turn around and contradict your theories; Picasso historians had a much easier time talking about his work once he was pushing up daisies instead of painting them. But if dead artists are a boon for historians, dead media are not. In fact, as soon as the media you study become obsolete, it's a bit of a stretch to call yourself a "historian of new media."

The bad news I have for new media historians is that the objects they study are in danger of evaporating out from under their studious gaze. On three separate battlegrounds, dinosaurs fattened on broadcast economies threaten to trample the newer species evolving in today's electronic networks. In some cases the threat is deliberate: Microsoftus Rex and TimeWarnerSaurus have little interest in encouraging the unimpeded evolution of media. In other cases, as I hope to show, even new media advocates--including many of you in this room--unwittingly buy into hierarchic models of preservation, property, or professorship that endanger the unfettered evolution of digital art.

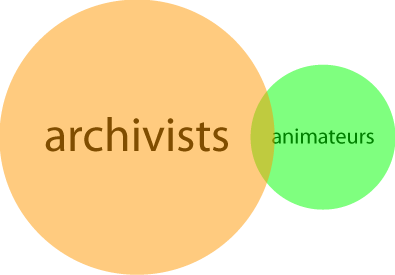

Let's start with preservation. The centralized storage strategy that has served as the default preservation paradigm for culture in the 18th through 20th centuries will utterly fail as the preservation paradigm for the 21st. Archivists specialize in keeping the works in their care as static as possible, but new media survive by remaining as mutable as possible.

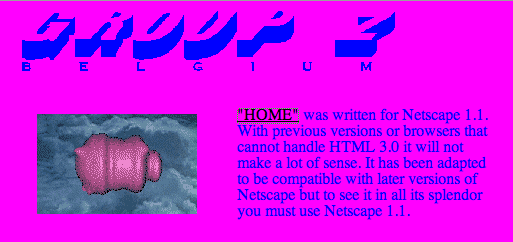

As an example, let's say an archivist had the foresight in 1994 to archive the Web project HOME by Group Z on a Windows-formatted floppy disk.

- By 1995, the work no longer functioned with current browsers.

- By 1997, many of the external links had expired.

- By 2002, you would be hard-pressed to find a floppy drive.

- By 2010, you may have trouble reading a disk formatted with Windows 95.

- By 2015, the floppy will have demagnetized or delaminated.

In other words, to archive this work in a climate-controlled vault would be to condemn it to death.

Now, I don't mean we should go out and burn down the archives; some of my best friends are archivists. My experience suggests archived documentation, in the form of spoken or written accounts, photographs, and videos, is essential for the success of more forward-looking preservation strategies. But cloistered repositories like archives leave out an important part of the preservation equation. It does no good to archive the original score for Bach's Goldberg Variations if there's no one around who either knows how to play a harpsichord or knows how to transcribe the work for a piano.

For media of the Internet age, the only alternative to storing fragments that point to a foregone experience is to accept the necessity of remaking that experience even if the work changes in the process. If we are unwilling to accept a paradigm shift from fixed to variable media, only those works with a static end product will survive. Some screenshots, Iris prints, and a handful of text explanations -- what a meager memory the storage paradigm would leave of this fecund moment in art history. We must learn to supplement this strategy with more powerful methods such as migration, emulation, and reinterpretation. In short, we need animateurs.

Animateurs are those loony folks who re-enact historical moments, whether medieval jousting tournaments or the Wright brothers' first flight. One of Internet art's first "historians," Robbin Murphy, once suggested that thinking about animateurs might help us understand what's missing in new media preservation, and I think he was right. Archivists minimize risks; animateurs take them on. Archivists wear white gloves; animateurs favor chain mail. In other words, animateurs are to archivists what astronauts are to astronomers.

That said, there are two types of animateurs, and we need both of them. The first tries to replicate the look and behavior of the original moment exactly, from bayonet to boot buckle. Ironically, to accomplish this the animateur's materials of manufacture--not to mention the backdrop--must change over time as uniforms wear to tatters and skylines once edged by trees are replaced by skyscrapers.

In new media, we call this preservation strategy emulation. The most ambitious test of this strategy to date was the Guggenheim's 2004 exhibition Seeing Double, organized by Carol Stringari, Caitlin Jones, Alain Depocas, and myself. This exhibition paired works still running on their original hardware--in this image, Grahame Weinbren and Roberta Friedman's Erl King from 1982--with emulated versions running on completely different hardware.

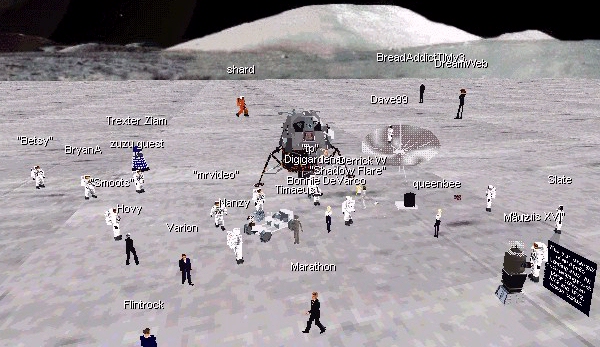

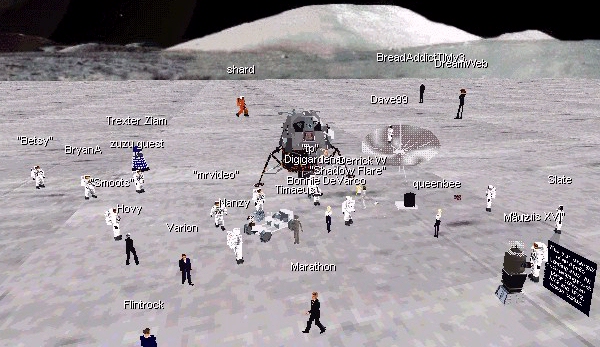

Other animateurs take a bit more license in their approach to historical re-creation. In new media, we call this preservation strategy reinterpretation, and it's potentially more powerful--if also more controversial--than emulation. Chris Salter noted Peter Sellars's provocative restaging of Stravinsky's Oedipus Rex--which of course was an operatic restaging of Sophocles' original play. Another group of animateurs commemorated the Apollo lunar landing in multi-user Virtual Reality.

Meanwhile, Internet artist Mark Napier, whose Java applet net.flag allows users to remix a constantly shifting emblem for the Internet based on components chosen from the world's flags, recognizes that reinterpretation may be the only way to keep his work up-to-date when either Java or the flags it renders go out of date. Artist Olia Lialina goes further by actively soliciting and distributing reinterpretations of her Web classic My Boyfriend Came Back from the War.

Being an animateur is no cakewalk. Apart from the technical skills required to, say, write a CP/M interpreter, you have to be able to interpret the choices artists would have made about their media--say, how Nam June Paik feels about flat screens. That's one reason the Variable Media Network has developed a questionnaire to survey the preferences artists have about how their own works should be re-created--if at all. Although we make the questionnaire prototype freely available to anyone who asks, we are also working to build an online version, so that anyone--especially potential animateurs--can access the data necessary to re-create works threatened by technological obsolescence.

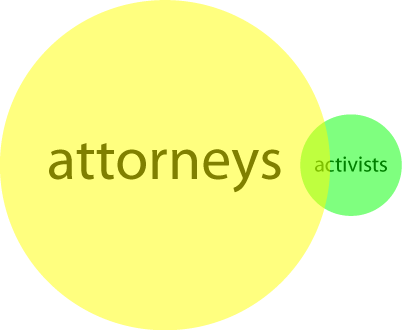

Musicians remixing each other, programmers hacking together an open code project, and activists organizing grass-roots campaigns depend on easy access to each other's time and labor. While networked creativity usually results from massaging one perspective or artwork against another, this frisson ironically depends on frictionless networks to supply the MP3 and MOV files for such transformations and recombinations.

Entrenched media monopolies, on the other hand, aren't interested in frictionless networks; their business model is based on maximizing the points of contact. Whenever a musician pays the producer, a producer pays the publisher, or a publisher pays rights holders, there's a lawyer with his hand out skimming cash from the exchange. In fact, the more friction in that exchange, the more money lawyers make--a fact you know if you've ever worked with lawyers in a negotiation.

So media conglomerates hire a lot of lawyers, and when they're not busy negotiating exchanges they keep themselves busy by suing Internet artists like etoy and Joy Garnett, remix composers like John Oswald and Negativland, and open software developers like those behind GNU/Linux. Intellectual property lawyers also saw fit to sue one of the sponsors of this conference on the grounds that the name of an Italian Renaissance artist better suited a French financial company than a magazine devoted to art and science. As ridiculous as it sounds, the suit precipitated a raid by French police on the home occupied by Roger Malina's 80-year-old mother and 8-year-old son. While evidence of Leonardo's culpability was scarce, evidence that lawyers run amok on today's media landscape is plentiful.

We call sharing information "cheating" when it happens among people who aren't powerful, yet it is the dominant mechanism for people in power to stay that way, from museum curators to stockbrokers to academics. Internet culture succeeded in such an explosive way precisely because it was a system designed to supercharge the practice that in other fields is called cheating. Students naturally share information among themselves, using tools designed by the greatest technical geniuses of our age for that express purpose. The Web, cell phones, instant messaging, email, peer-to-peer networks...these are all technologies for supercharging the circulation of ideas and culture.

Unfortunately, the definition of an institution is "an organization resistant to change," and universities are no exception. The dominant attitude toward sharing information in today's educational institutions is DON'T. For a glimpse at the appalling mismatch between education and intellectual property, look no further than the classroom license approved by the General Counsel of the University of Texas at Austin.

If "keep your eyes on your own paper" made any sense in the age of Encyclopedia Britannica, it makes no sense in the age of Google, where value lies not in memorizing facts to overcome information deficit but in connecting facts to cope with information overload.

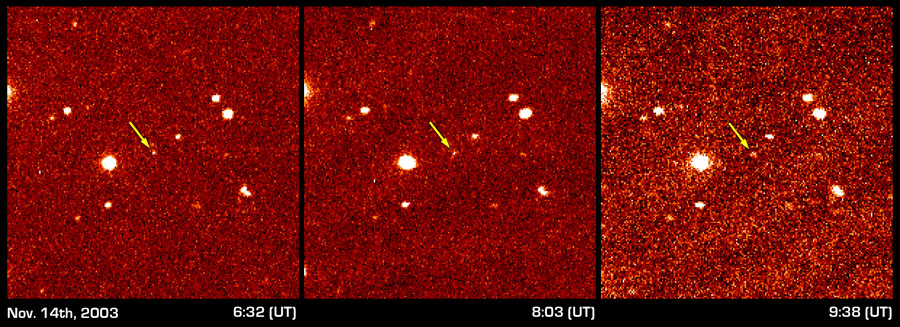

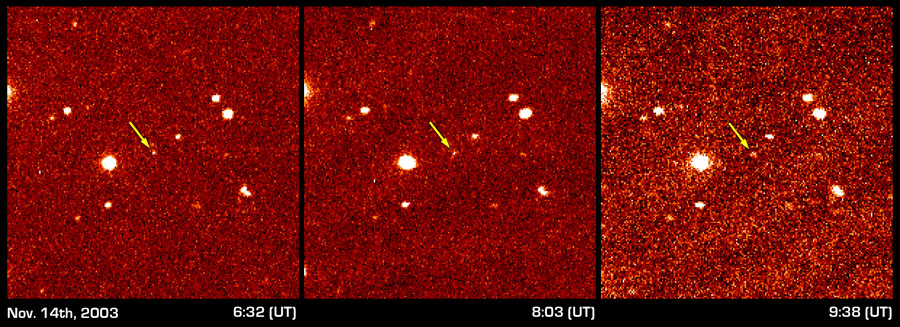

Some researchers have also suffered a foretaste of the impending train wreck between print and network cultures. In last July's 2003 EL61 scandal, Caltech astronomer Michael Brown emailed congratulations to a Spanish team for beating him to the announcement of a newly discovered planet beyond Pluto. Then a colleague perusing the server logs for Brown's observatory saw that the winning team had Googled into his online logs the day before they announced their discovery. This fiasco is more than an indictment of overzealous astronomers bent on glory. It is an indictment of a paradigm of hoarding information left over from the age of print. Even using the Internet the way it was intended becomes "academic dishonesty" when viewed through the lens of broadcast culture.

As if to compensate for the encroachment of networked research on print scholarship, academic publishers like Elsevier have raised journal prices 200% in the past twenty years. As a result, professors can't assign their articles to their own students because their universities can't afford the subscriptions--and because, like filmmakers and musicians trying to play by the rules of broadcast culture, they sign away their copyright in a Faustian bargain with publishers.

An image of the discovery of Sedna, another Pluto-like object discovered by Michael Brown of Caltech.

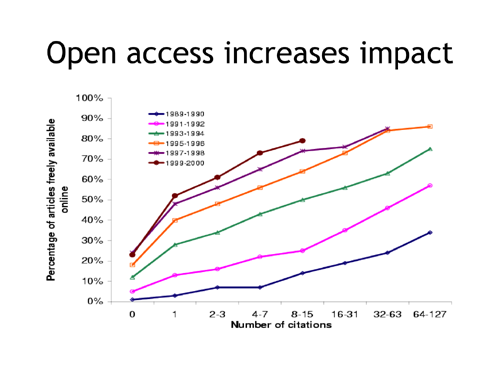

Researchers in the Open Access movement refuse that Faustian bargain. Instead they post free versions of their articles on their Web sites under a Creative Commons license. Or they deposit them in online repositories like the Public Library of Science. Or they publish in

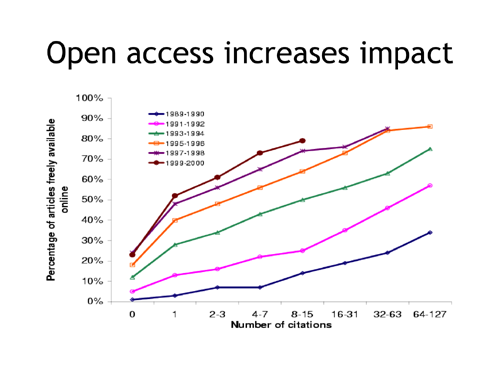

Open Access journals, where the citation impact is 50 to 250% greater than for articles in closed journals.

Of course, junior researchers often fear they won't get tenure if they don't publish in prestigious closed journals. But even scholars who publish in commercial journals can ask for permission to make pre- or postprints available on the Web or in a free repository. The worst that can happen is that the journal will reject the request, and a surprising number of journals consent to this practice.

A longer-term solution is to lobby university tenure committees for a more enlightened measure of recognition than bean-counting print articles. Access advocate Peter Suber and his collaborators are working on a new metric that includes net-native measures like downloads and traffic. Their work is supported by evidence that a high number of downloads correlates with a high number of citations.

Source: Steve Lawrence, "Online or Invisible?" Nature, vol. 411, no. 6837 (2001), p. 521.

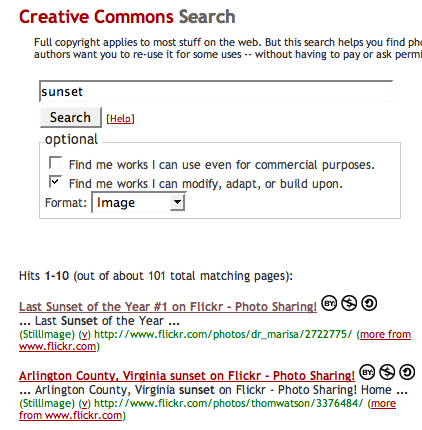

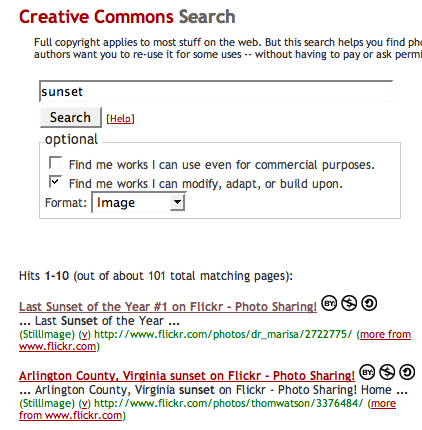

Open licenses such as Creative Commons and the GNU/GPL are one defense against the predation of commercial interests in scholarly and creative ecologies. But you can't re-use someone's art or software if you don't know where to find it. To help, the legal activists of Creative Commons have developed an XML search engine that finds instances of open-licensed art online, as well as a plug-in to the peer-to-peer application Morpheus that enables downloaders to see when an mp3 is tagged with a Creative Commons license.

These innovations are useful both as practical engines of discovery and as empirical evidence that technologies like peer-to-peer networks can be used for good. That said, open licenses alone only encourage the individual re-use of material; they don't help that individual establish any sort of collaborative relationship with actual people. In network culture, process is just as important as access.

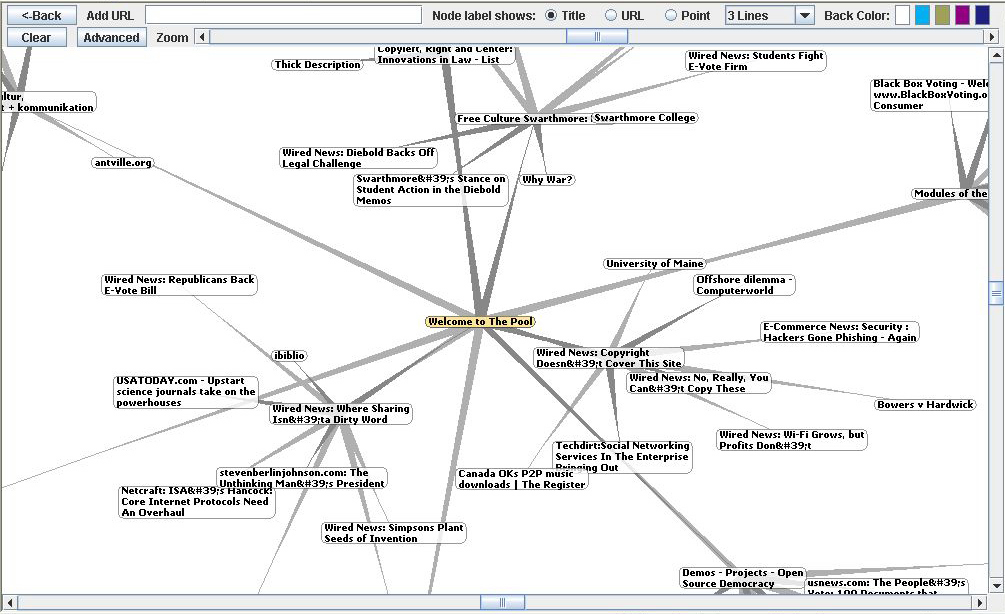

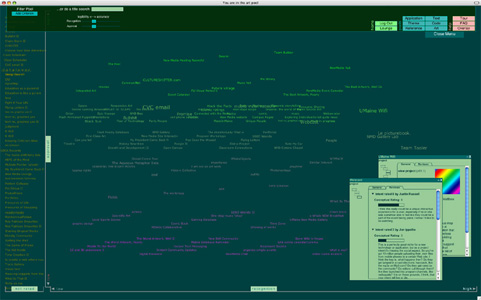

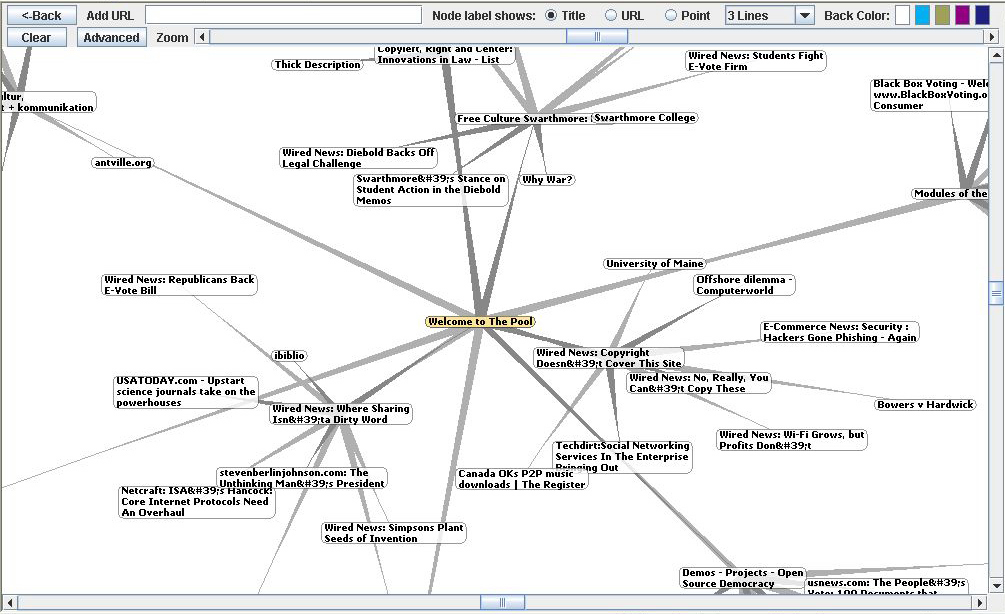

The Pool, an online environment for sharing art, text, and code, attempts to foster collaboration as well as consumption. Visitors to this site can locate projects by author, subject, or license terms. Contributors receive feedback at all stages of a project, from intent to approach to release. And the graph that emerges from this bottom-up process helps the best projects quite literally rise to the top.

Ever notice how many of the top new media critics are also new media artists? Here's just a sampling:

- Roy Ascott

- Jack Burnham

- Simon Biggs

- Manuel de Landa

- Sara Diamond

- Mary Flanagan

- Matthew Fuller

- Alex Galloway

- Ken Goldberg

- Natalie Jeremijenko

- Eduardo Kac

- Olia Lialina

- Patrick Lichty

- Lev Manovich

- Paul D. Miller

- Robbin Murphy

- A. Michael Noll

- Gordon Pask

- Simon Penny

- Rick Rinehart

- Trebor Scholz

- Jeffrey Shaw

- Peter Weibel

These folks discovered long ago that HTML and email could be used equally for creation or criticism. While other academics inked mute articles destined for dusty shelves, the salient new media thinkers of the past decade spent their time in boisterous videoconferences, heated email debates, and blogs a-buzz with realtime citations, conflicts, and collaborations. As Mark Taylor puts it, criticism can no longer be content with "the production of texts accessible to fewer and fewer people, which promote organizations and institutions whose obsolescence is undeniable."

Furthermore, new media applications and artworks can serve as powerful critical statements themselves; Matthew Fuller's essay on browser wars is good, but a few minutes surfing with his Web Stalker makes a much more powerful case for the limitations of Internet Explorer. That's because new media, whether art, text, or code, tend to enact rather than represent--a power Joline Blais, in our forthcoming book At the Edge of Art, terms "executability." Executable media aren't content to sit in a classroom any more than on a pedestal or auction block. Cell phones are out there now, bringing down presidents. Digital fiction has dumbfounded surveillance networks, and speculative software has swayed court cases.

But things may be about to change, and not for the better. Look around you: how many artists are there at this conference? How many technologists? Will this conference help to tear down or build up the walls between theorists and practitioners?

For example, how many participants have you seen use network media in their presentations? Unless you're David Byrne, you have no excuse for using PowerPoint--a broadcast medium that would make Rupert Murdoch drool. Unconnected, unnavigable, and profoundly anti-social, PowerPoint is the academy's first defense against new media's threat to topple the ivory tower.

But academia has an even more powerful defense against change: an artificially restrictive measure of peer influence. In an academic context, "peer" means one of a handful of people familiar with your subdiscipline; to "influence" them is to publish a journal article that some fraction of this coterie might read. In a new media context, by contrast, influence is counted in seven-digit figures rather than two- or three-digit ones. To be a peer on Gnutella is to be sharing files with a million fellow users. If you only get a hundred hits on your Web site, you might as well throw in the towel and break out the oil paints. Viewed in this light, the academic definition of "peer influence" sounds a lot like the Monty Python "Royal Society for Putting Things on Top of Other Things"--a reminder that one of the synonyms for the word "academic" is "irrelevant."

But that doesn't stop academics from applying their restrictive view of the world to new media. To a tenure committee, Lev Manovich is a guy who wrote one book, Christiane Paul has a part-time job at a museum, and jodi is two artists who've had only four gallery shows between them. Yet they would be on any new media cognoscente's list of the most influential scholars, curators, and artists.

One way to counter the academic co-optation of new media is to establish a net-native metric. But we also don't want new media art to be completely Napsterized or Googlified--measured purely by volume--because then a rara avis may get lost in the global jungle. A case in point is the Wikipedia "vote for deletion" page on Mezangelle, the language invented by the artist Mez. Mezangelle is well known inside the Internet art community, but one of the many votes for deletion argued that there were only 100 hits for "Mezangelle net.art".

We don't want the cultural significance of an online artifact to be reduced to a popularity contest, or the history of new media will be one of Nigerian bank scams and Paris Hilton videos. Yet we can't fall back on traditional academic criteria either. We need a new metric.

For starters, we'll need a wider range of input data. Google's PageRank algorithm is based primarily on hyperlinks, whose weaknesses include their ease of manipulation (think Googlebombing), their lack of metadata (are you linking to it because it's great or because it stinks?), and their potential bias (stereotypically, male bloggers make many weak links while women make fewer, stronger ones). Nevertheless, given that articles are only one form of new media production among many, links are a more universal basis for recognition than formal citations.
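To make those weaknesses concrete, here is a minimal sketch of the textbook PageRank power iteration in Python. It is not Google's production ranking, which draws on many more signals; the site names are hypothetical, and the damping factor of 0.85 is simply the value given in the original PageRank description. Note that every link counts as an endorsement whatever its author meant by it, and that a handful of throwaway pages can inflate a target's score.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook power-iteration PageRank over a dict-of-lists link graph.

    `links` maps each page to the pages it links to. A page with no
    outgoing links (a "dangling" page) spreads its score evenly over
    every page, so the scores always sum to 1.
    """
    # Collect every page, including ones that only appear as link targets.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    if not pages:
        return {}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Each page starts with the "random jump" share of the score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A link passes on score regardless of why it was made.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical sites: an artist and a critic who link to each other,
    # plus a fan page that links to the artist.
    web = {
        "artist_site": ["critic_blog"],
        "critic_blog": ["artist_site"],
        "fan_page": ["artist_site"],
    }
    # A crude "Googlebomb": five throwaway pages all pointing at one target.
    bombed = dict(web)
    for i in range(5):
        bombed[f"spam{i}"] = ["obscure_page"]

    for label, graph in (("honest", web), ("bombed", bombed)):
        scores = pagerank(graph)
        ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        print(label, [(page, round(score, 3)) for page, score in ranked])
```

Because the function sees only who links to whom, nothing in its input can distinguish praise from ridicule; that distinction would have to come from metadata attached to the links themselves.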

To get a more nuanced understanding of a researcher's role in various research communities may require gathering more metadata about her links to other researchers. One approach is to roll your own recognition network, such as The Pool. Because users have to register to use it, The Pool is a good source of well-formed metadata to work from; yet ultimately it's a centralized approach, even if it's more registry than repository. On a more distributed front, the blogging community--frustrated with raw link ranks like the Technorati 100--has begun to think collectively about alternative metrics, such as adding metadata to links that can be harvested by various research discovery tools.
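As a rough illustration of what harvesting link metadata could look like, the sketch below uses Python's standard `html.parser` module to tally typed links per target URL. The `rel` tokens `vote-for` and `vote-against` are assumptions made for this example, not an established vocabulary; a real harvester would have to agree on terms with the bloggers producing the links.

```python
from collections import defaultdict
from html.parser import HTMLParser


class LinkVoteHarvester(HTMLParser):
    """Tally typed links per target URL while parsing an HTML page.

    The rel tokens "vote-for" and "vote-against" are hypothetical
    conventions for this example; any other link counts as "untyped".
    """

    def __init__(self) -> None:
        super().__init__()
        self.votes: dict[str, dict[str, int]] = defaultdict(
            lambda: {"for": 0, "against": 0, "untyped": 0}
        )

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # rel may hold several space-separated tokens, or be absent/valueless.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "vote-for" in rel_tokens:
            self.votes[href]["for"] += 1
        elif "vote-against" in rel_tokens:
            self.votes[href]["against"] += 1
        else:
            self.votes[href]["untyped"] += 1


if __name__ == "__main__":
    page = (
        '<p>Brilliant: <a href="http://example.org/a" rel="vote-for">A</a>. '
        'Overrated: <a href="http://example.org/b" rel="vote-against nofollow">B</a>. '
        'See also <a href="http://example.org/a">A again</a>.</p>'
    )
    harvester = LinkVoteHarvester()
    harvester.feed(page)
    harvester.close()
    for url, tally in harvester.votes.items():
        print(url, tally)
```

Keeping "untyped" links as a separate count, rather than folding them into either vote, preserves the distinction between an explicit judgment and a bare reference.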

The other half of the problem is the output traditional metrics deliver. Any ranked list is a hierarchy, and as such fundamentally at odds with new media. A list of artists or academics with numbers next to their names is a pitiful representation of their impact on the field. Ultimately, ranked lists are, like standardized tests and representative democracy, a convenient excuse for not thinking.

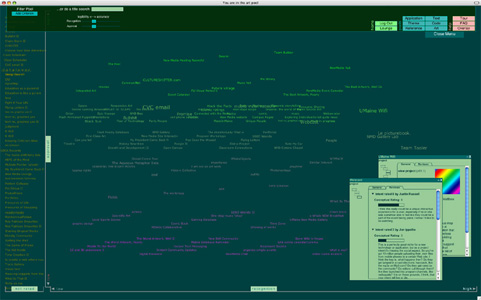

To defeat rankism in the output of such metrics may require abandoning lists altogether in favor of clouds. Unlike ranked lists, clouds of influence can be contextual (relative to the subculture being measured), multiple (applicable to more than one subculture), variable (reflecting changes over shorter timescales than a global metric), and net-native. Del.icio.us or Connotea tag clusters and Touchgraph link diagrams might be repurposed to create distributed metrics. One of The Pool's mechanisms for charting influence is Jerome Knope's Collaborative Network Grapher, which relates people indirectly through their collaborations in The Pool.
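The following sketch shows one way such a contextual cloud might be computed: people are connected whenever they share a project, and each person's cloud is simply everyone within a few collaboration hops, with no global ranking. It is a hypothetical illustration, not the actual algorithm behind the Collaborative Network Grapher; the function names, sample names, and the two-hop default are all assumptions.

```python
from collections import Counter, defaultdict, deque
from itertools import combinations


def collaboration_graph(projects: dict[str, list[str]]) -> dict[str, Counter]:
    """Connect two people once for every project they share.

    `projects` maps a (hypothetical) project name to its contributors.
    Edge weights count shared projects.
    """
    graph: dict[str, Counter] = defaultdict(Counter)
    for members in projects.values():
        # sorted(set(...)) drops duplicate names and makes pairing deterministic.
        for a, b in combinations(sorted(set(members)), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return dict(graph)


def influence_cloud(graph: dict[str, Counter], person: str,
                    max_hops: int = 2) -> dict[str, int]:
    """Everyone within `max_hops` collaborations of `person`, with hop distance.

    The result is a neighborhood relative to one person, not a global ranking.
    """
    distances = {person: 0}
    queue = deque([person])
    while queue:  # breadth-first search outward from `person`
        current = queue.popleft()
        if distances[current] == max_hops:
            continue
        for neighbor in graph.get(current, {}):
            if neighbor not in distances:
                distances[neighbor] = distances[current] + 1
                queue.append(neighbor)
    del distances[person]
    return distances


if __name__ == "__main__":
    projects = {
        "p1": ["ana", "ben"],
        "p2": ["ben", "cai"],
        "p3": ["cai", "dee"],
        "p4": ["ana", "ben"],
    }
    graph = collaboration_graph(projects)
    print(graph["ana"])                   # ana's only collaborator is ben, via two projects
    print(influence_cloud(graph, "ana"))  # ben at one hop, cai at two; dee is out of range
```

Because each cloud is computed from one person's vantage point, two people can see entirely different neighborhoods, which is the contextual quality a single ranked list cannot offer.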

What you can do to counter these threats

- Link to work you respect

- Insist on open access

- Contribute to the discussion

- Wipe PowerPoint from your hard drive

Insist on open access:

Contribute to the discussion:

Wipe PowerPoint from your hard drive:

- Make a simple Web site in 3 minutes for free with Blogger.

- Make a fancier Web site for free with Plone.

- Learn HTML yourself for free at W3 Schools.

- Re-use the code used in this site:

  - Expanding menu at left (JavaScript).

  - Styles for this page (CSS).

  - HTML (just download this page using your browser's "Save As" function).

As this talk is given on the occasion of REFRESH!, the first international conference on the histories of art, science, and technology, let's start with a word about historians.

As this talk is given on the occasion of REFRESH!, the first international conference on the histories of art, science, and technology, let's start with a word about historians.

Let's start with preservation. The centralized storage strategy that has served as the default preservation paradigm for culture in the 18th through 20th centuries will utterly fail as the preservation paradigm for the 21st. Archivists specialize in keeping the works in their care as static as possible, but new media survive by remaining as mutable as possible.

Let's start with preservation. The centralized storage strategy that has served as the default preservation paradigm for culture in the 18th through 20th centuries will utterly fail as the preservation paradigm for the 21st. Archivists specialize in keeping the works in their care as static as possible, but new media survive by remaining as mutable as possible.

Now, I don't mean we should go out and burn down the archives; some of my best friends are archivists. My experience suggests archived documentation, in the form of spoken or written accounts, photographs, and videos, is essential for the success of more forward-looking preservation strategies. But cloistered repositories like archives leave out an important part of the preservation equation. It does no good to archive the original score for Bach's Goldberg Variations if there's no one around who either knows how to play a harpsichord or knows how to transcribe the work for a piano.

Now, I don't mean we should go out and burn down the archives; some of my best friends are archivists. My experience suggests archived documentation, in the form of spoken or written accounts, photographs, and videos, is essential for the success of more forward-looking preservation strategies. But cloistered repositories like archives leave out an important part of the preservation equation. It does no good to archive the original score for Bach's Goldberg Variations if there's no one around who either knows how to play a harpsichord or knows how to transcribe the work for a piano. Animateurs are those loony folks who re-enact historical moments, whether medieval jousting tournaments or the Wright brother's first flight. One of Internet art's first "historians," Robbin Murphy, once suggested that thinking about animateurs might help us understand what's missing in new media preservation, and I think he was right. Archivists minimize risks; animateurs take them on. Archivists wear white gloves; animateurs favor chain mail. In other words, animateurs are to archivists what astronauts are to astronomers.

Animateurs are those loony folks who re-enact historical moments, whether medieval jousting tournaments or the Wright brother's first flight. One of Internet art's first "historians," Robbin Murphy, once suggested that thinking about animateurs might help us understand what's missing in new media preservation, and I think he was right. Archivists minimize risks; animateurs take them on. Archivists wear white gloves; animateurs favor chain mail. In other words, animateurs are to archivists what astronauts are to astronomers. That said, there are two types of animateurs, and we need both of them. The first tries to replicate the look and behavior of the original moment exactly, from bayonet to boot buckle. Ironically, to accomplish this the animateur's materials of manufacture--not to mention the backdrop--must change over time as uniforms wear to tatters and skylines once edged by trees are replaced by skyscrapers.

That said, there are two types of animateurs, and we need both of them. The first tries to replicate the look and behavior of the original moment exactly, from bayonet to boot buckle. Ironically, to accomplish this the animateur's materials of manufacture--not to mention the backdrop--must change over time as uniforms wear to tatters and skylines once edged by trees are replaced by skyscrapers. In new media, we call this preservation strategy emulation. The most ambitious test of this strategy to date was the Guggenheim's 2004 exhibition Seeing Double, organized by Carol Stringari, Caitlin Jones, Alain Depocas, and myself. This exhibition paired works still running on their original hardware--in this image, Grahame Weinbren and Roberta Friedman's Erl King from 1982--with emulated versions running on completely different hardware.

In new media, we call this preservation strategy emulation. The most ambitious test of this strategy to date was the Guggenheim's 2004 exhibition Seeing Double, organized by Carol Stringari, Caitlin Jones, Alain Depocas, and myself. This exhibition paired works still running on their original hardware--in this image, Grahame Weinbren and Roberta Friedman's Erl King from 1982--with emulated versions running on completely different hardware.

Other animateurs take a bit more license in their approach to historical re-creation. In new media, we call this preservation strategy reinterpretation, and it's potentially more powerful--if also more controversial--than emulation. Chris Salter noted Peter Sellar's provocative restaging of Stravinsky's Oedipus Rex--which of course was an operatic restaging of Sophocles' original play. Another group of animateurs commemorated the Apollo lunar landing in multi-user Virtual Reality.

Other animateurs take a bit more license in their approach to historical re-creation. In new media, we call this preservation strategy reinterpretation, and it's potentially more powerful--if also more controversial--than emulation. Chris Salter noted Peter Sellar's provocative restaging of Stravinsky's Oedipus Rex--which of course was an operatic restaging of Sophocles' original play. Another group of animateurs commemorated the Apollo lunar landing in multi-user Virtual Reality.  Meanwhile, Internet artist Mark Napier, whose Java applet net.flag allows users to remix a constantly shifting emblem for the Internet based on components chosen from the world's flags, recognizes that reinterpretation may be the only way to keep his work up-to-date when either Java or the flags it renders go out of date. Artist Olia Lialina goes further by actively soliciting and distributing reinterpretations of her Web classic My Boyfriend Came Back from the War.

Meanwhile, Internet artist Mark Napier, whose Java applet net.flag allows users to remix a constantly shifting emblem for the Internet based on components chosen from the world's flags, recognizes that reinterpretation may be the only way to keep his work up-to-date when either Java or the flags it renders go out of date. Artist Olia Lialina goes further by actively soliciting and distributing reinterpretations of her Web classic My Boyfriend Came Back from the War.

Musicians remixing each other, programmers hacking together an open code project, and activists organizing grass-roots campaigns depend on easy access to each other's time and labor. While networked creativity usually results from massaging one perspective or artwork against another, this frisson ironically depends on frictionless networks to supply the MP3 and Mov files for such transformations and recombinations.

Musicians remixing each other, programmers hacking together an open code project, and activists organizing grass-roots campaigns depend on easy access to each other's time and labor. While networked creativity usually results from massaging one perspective or artwork against another, this frisson ironically depends on frictionless networks to supply the MP3 and Mov files for such transformations and recombinations. We call sharing information "cheating" when it happens among people who aren't powerful, yet it is the dominant mechanism for people in power to stay that way, from museum curators to stockbrokers to academics. Yet Internet culture succeeded in such an explosive way because it was a system designed to supercharge the practice that in other fields is currently called cheating. Students naturally share information among themselves, using tools expressly designed by the greatest technical geniuses of our age for that express purpose. The Web, cell phones, instant messaging, email, peer-to-peer networks...these are all technologies for supercharging the circulation of ideas and culture.

We call sharing information "cheating" when it happens among people who aren't powerful, yet it is the dominant mechanism for people in power to stay that way, from museum curators to stockbrokers to academics. Yet Internet culture succeeded in such an explosive way because it was a system designed to supercharge the practice that in other fields is currently called cheating. Students naturally share information among themselves, using tools expressly designed by the greatest technical geniuses of our age for that express purpose. The Web, cell phones, instant messaging, email, peer-to-peer networks...these are all technologies for supercharging the circulation of ideas and culture. If "keep your eyes on your own paper" made any sense in the age of Encyclopedia Britannica, it makes no sense in the age of Google, where value lies in not in memorizing facts to overcome information deficit but in connecting facts to cope with information overload.

If "keep your eyes on your own paper" made any sense in the age of Encyclopedia Britannica, it makes no sense in the age of Google, where value lies in not in memorizing facts to overcome information deficit but in connecting facts to cope with information overload.

Open licenses such as Creative Commons and the GNU/GPL are one defense against the predation of commercial interests in scholarly and creative ecologies. But you can't re-use someone's art or software if you don't know where to find it. To help, the legal activists of Creative Commons have developed an XML search engine that finds instances of open-licensed art online, as well as a plug-in to the peer-to-peer application Morpheus that enables downloaders to see when an mp3 is tagged with a Creative Commons license.

Open licenses such as Creative Commons and the GNU/GPL are one defense against the predation of commercial interests in scholarly and creative ecologies. But you can't re-use someone's art or software if you don't know where to find it. To help, the legal activists of Creative Commons have developed an XML search engine that finds instances of open-licensed art online, as well as a plug-in to the peer-to-peer application Morpheus that enables downloaders to see when an mp3 is tagged with a Creative Commons license. These innovations are useful both as practical engines of discovery and as empirical evidence that technologies like peer-to-peer networks can be used for good. That said, open licenses alone only encourage the individual re-use of material; they don't help that individual establish any sort of collaborative relationship with actual people. In network culture, process is just as important as access.

These innovations are useful both as practical engines of discovery and as empirical evidence that technologies like peer-to-peer networks can be used for good. That said, open licenses alone only encourage individuals to re-use material; they don't help those individuals establish any sort of collaborative relationship with actual people. In network culture, process is just as important as access.

But academia has an even more powerful defense against change: an artificially restrictive measure of peer influence. In an academic context, a "peer" is one of a handful of people familiar with your subdiscipline, and to "influence" them is to publish a journal article that some fraction of this coterie might read. In a new media context, by contrast, influence is counted in seven-digit figures rather than two- or three-digit ones. To be a peer on Gnutella is to share files with a million fellow users. If you only get a hundred hits on your Web site, you might as well throw in the towel and break out the oil paints. Viewed in this light, the academic definition of "peer influence" sounds a lot like Monty Python's "Royal Society for Putting Things on Top of Other Things", a reminder that one of the synonyms for the word "academic" is "irrelevant."

But academia has an even more powerful defense against change: an artificially restrictive measure of peer influence. In an academic context, "peer" means one of a handful of people familiar with your subdiscipline; to "influence" them is to publish a journal article that some fraction of this coterie might read. In a new media context, by contrast, influence is counted in seven digit-figures rather than two or three. To be a peer on Gnutella is to be sharing files with a million fellow users. If you only get a hundred hits on your Web site, you might as well throw in the towel and break out the oil paints. Viewed in this light, the academic definition of "peer influence" sounds a lot like the Monty Python "Royal Society for Putting Things on Top of Other Things"--a reminder that one of the synonyms for the word "academic" is "irrelevant." The other half of the problem is the output traditional metrics deliver. Any ranked list is a hierarchy, and as such fundamentally at odds with new media. A list of artists or academics with numbers next to their names is a pitiful representation of their impact on the field. Ultimately, ranked lists are, like standardized tests and representative democracy, a convenient excuse for not thinking.

The other half of the problem is the output that traditional metrics deliver. Any ranked list is a hierarchy, and as such is fundamentally at odds with new media. A list of artists or academics with numbers next to their names is a pitiful representation of their impact on the field. Ultimately, ranked lists, like standardized tests and representative democracy, are a convenient excuse for not thinking.

Defeating rankism in the output of such metrics may require abandoning lists altogether in favor of clouds. Unlike ranked lists, clouds of influence can be contextual (relative to the subculture being measured), multiple (applicable to more than one subculture), variable (reflecting changes over shorter timescales than a global metric), and net-native. Del.icio.us or Connotea tag clusters and TouchGraph link diagrams might be repurposed to create distributed metrics. One of The Pool's mechanisms for charting influence is Jerome Knope's Collaborative Network Grapher, which relates people indirectly through their collaborations in The Pool.
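
The idea of relating people indirectly through shared work can be sketched with a small graph. This is a minimal illustration in Python, not Knope's Collaborative Network Grapher: it assumes collaboration data reduces to a mapping from project names to participants, and the function names, project names, and people are all hypothetical. Each person's "cloud" is everyone reachable within a few collaborative hops, returned as an unordered set of relations rather than a ranked list.

```python
from collections import defaultdict, deque
from itertools import combinations


def collaboration_graph(projects: dict[str, list[str]]) -> dict[str, dict[str, int]]:
    """Link every pair of people who worked on the same project.

    Edge weights count how many projects each pair shares.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for members in projects.values():
        # sorted(set(...)) drops duplicate names and gives a stable pair order.
        for a, b in combinations(sorted(set(members)), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    # Convert to plain dicts so lookups of unknown names don't create entries.
    return {person: dict(links) for person, links in graph.items()}


def influence_cloud(graph: dict[str, dict[str, int]], person: str,
                    max_hops: int = 2) -> dict[str, int]:
    """Return {other person: number of hops} for everyone within max_hops.

    Breadth-first search, so each distance is the shortest collaborative path.
    The result is a set of relations, deliberately left unranked.
    """
    distances = {person: 0}
    queue = deque([person])
    while queue:
        current = queue.popleft()
        if distances[current] == max_hops:
            continue  # don't expand past the radius
        for neighbor in graph.get(current, {}):
            if neighbor not in distances:
                distances[neighbor] = distances[current] + 1
                queue.append(neighbor)
    del distances[person]
    return distances


if __name__ == "__main__":
    projects = {  # hypothetical sample data
        "sound-piece": ["ana", "ben"],
        "web-map": ["ben", "cho"],
        "game": ["cho", "dev"],
    }
    graph = collaboration_graph(projects)
    print(influence_cloud(graph, "ana"))  # {'ben': 1, 'cho': 2}
```

Because the graph is built only from the projects passed in, feeding it one subculture's projects yields a contextual cloud, and a different subset yields a different one. With the sample data, Ana's cloud holds Ben at one hop and Cho at two, while Dev lies outside the default two-hop radius.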